Why AI Forgets Everything You Tell It (And How to Fix It in 2025)

I was 20 minutes into a debugging session with Claude. I'd explained my architecture, my database schema, and three different approaches I'd tried. Just when the AI finally understood my problem, the session timed out and it forgot everything.

This happens 5-10 times a week to many developers who use AI tools, and it isn't a bug: it's how these systems are designed.

🧠 Why AI Has No Memory

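AI models have strong semantic memory (general facts learned in training, like “JavaScript is single-threaded”) but zero episodic memory (personal history, like “we ditched MongoDB last week”). Every request is stateless: the model processes your prompt, responds, and keeps nothing. A chat only looks continuous because the app resends the earlier messages on every turn.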
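To make “stateless” concrete, here's a minimal Python sketch. `toy_model` is a hypothetical stand-in for a real LLM API (it just checks whether a fact appears in the prompt), and `ChatSession` is an illustrative name, not any vendor's SDK. The point is that the app, not the model, holds the history, so a fresh session starts from nothing.

```python
from typing import Callable

Message = dict[str, str]


def toy_model(messages: list[Message]) -> str:
    """Hypothetical LLM stand-in: it can only 'know' what is in `messages`."""
    prompt = " ".join(m["content"] for m in messages)
    if "Postgres" in prompt:
        return "You're using Postgres."
    return "I don't know which database you use."


class ChatSession:
    """The app, not the model, keeps the history and resends all of it every turn."""

    def __init__(self, model: Callable[[list[Message]], str]) -> None:
        self.model = model
        self.history: list[Message] = []

    def send(self, user_text: str) -> str:
        self.history.append({"role": "user", "content": user_text})
        reply = self.model(self.history)  # the full history goes in on every call
        self.history.append({"role": "assistant", "content": reply})
        return reply
```

After `send("We ditched MongoDB for Postgres last week.")`, asking the same session “Which database do we use?” returns “You're using Postgres.”, while a brand-new `ChatSession` asked the same question replies “I don't know which database you use.”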

The only “memory” it has is its context window: a temporary scratchpad that holds the current conversation. Even with million-token windows, three problems remain:

- Session amnesia: Start a new chat and the window is empty, so your AI forgets everything again

- Speed penalty: Processing a full window of roughly 750,000 words (about a million tokens) can take minutes, breaking conversational flow

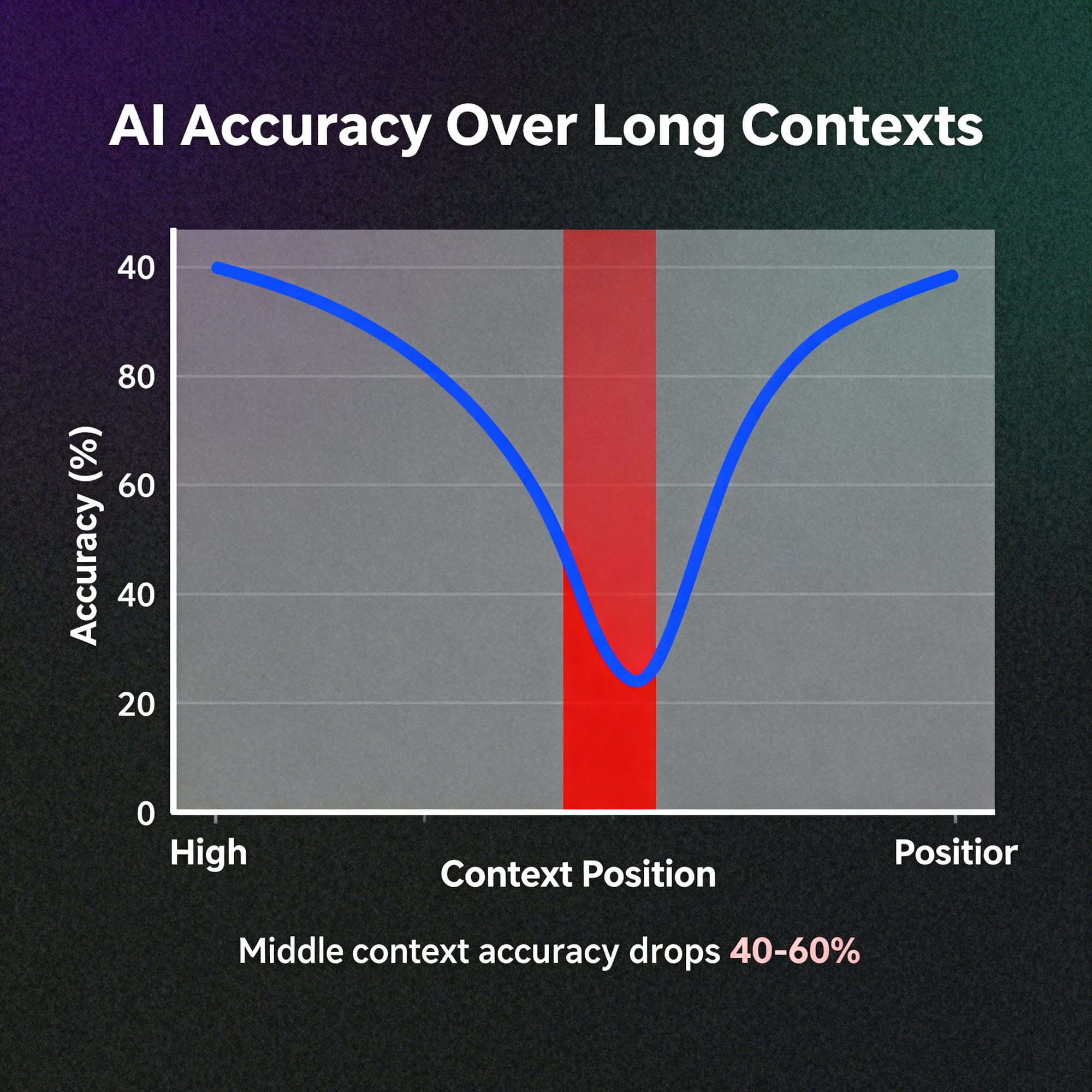

- Lost in the middle: Research has found that accuracy can drop 40-60% for information buried in the middle of a long context. Models handle the start and end well but struggle to find that one specific fact in paragraph 247
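Here's a rough sketch of the context window as a fixed budget. It assumes the common rule of thumb of about 0.75 English words per token (so 750,000 words comes out to roughly a million tokens); real tokenizers vary, and `fit_to_window` is a hypothetical helper, not how any particular product trims history. Whatever doesn't fit is simply gone.

```python
import math

WORDS_PER_TOKEN = 0.75  # rough rule of thumb for English text; real tokenizers vary


def estimate_tokens(text: str) -> int:
    """Crude token estimate: word count divided by ~0.75 words per token."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)


def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages that fit in the budget; older ones are dropped."""
    kept: list[str] = []
    used = 0
    for msg in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(msg)
        if used + cost > max_tokens:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```

Under this heuristic, `estimate_tokens("word " * 750_000)` returns 1,000,000, and with an 8-token budget `fit_to_window(["a b c", "d e f", "g h i"], 8)` keeps only the last two messages.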

So when ChatGPT or Claude seems “aware,” that isn't memory. It's an illusion built from whatever happens to be in the context window.

❌ How People Work Around It

Right now, you are the AI's memory. You copy summaries from previous chats, maintain a “profile” document you paste at the start of every conversation, and re-explain your architecture every time you switch from ChatGPT to Gemini to Claude to Cursor.

All told, you can lose 15 or more hours a month managing AI amnesia.

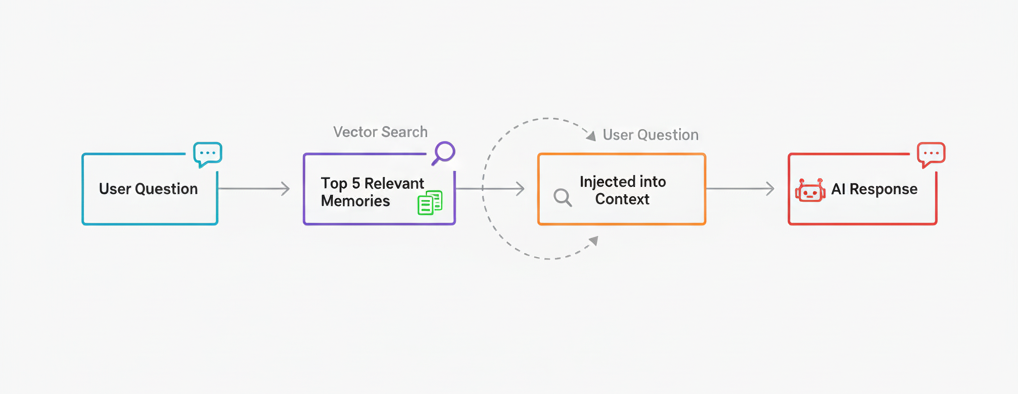

🔍 The Industry Fix: RAG

Retrieval-Augmented Generation (RAG) stores past conversations as vector embeddings and, when you ask something, fetches the most similar ones and adds them to the prompt. It's useful for recalling facts but bad at narrative or time-based reasoning.

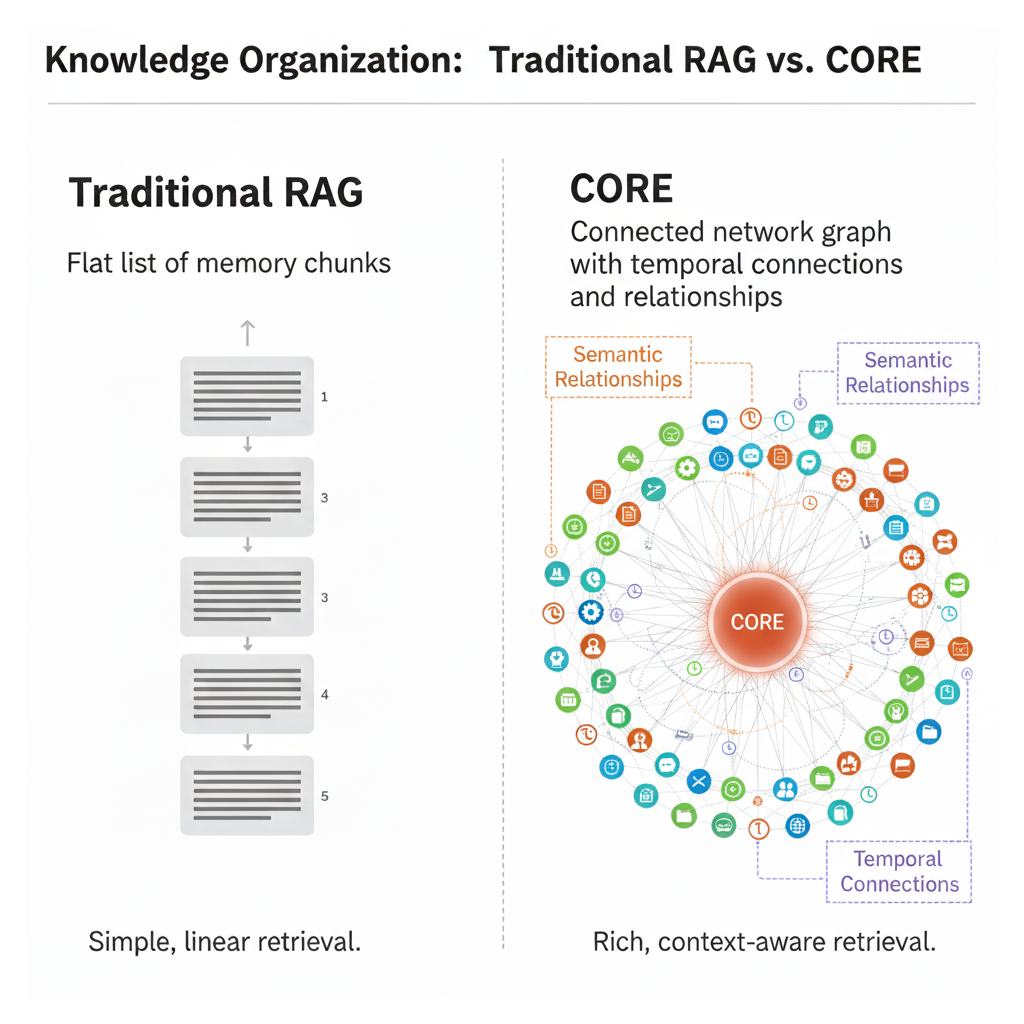

RAG knows topics, not stories. It can’t tell if your opinion changed, what came first, or how things evolved.

RAG turns a memory problem into a search problem. The AI doesn't actually remember anything; it's just handed a cheat sheet before every response. For simple factual recall like “What was the Q3 deadline?” that works well, but it has a fundamental limitation.
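A minimal RAG loop looks roughly like this. To keep it self-contained, word counts and cosine similarity stand in for a learned embedding model and a vector database; `embed`, `retrieve`, and `build_prompt` are illustrative names, not a specific library's API.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter[str]:
    """Toy 'embedding': lowercase word counts. Real RAG uses a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter[str], b: Counter[str]) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, memories: list[str], k: int = 1) -> list[str]:
    """Return the k stored memories most similar to the query (topic match only)."""
    q = embed(query)
    return sorted(memories, key=lambda m: cosine(q, embed(m)), reverse=True)[:k]


def build_prompt(query: str, memories: list[str], k: int = 1) -> str:
    """The 'cheat sheet': retrieved notes are pasted in front of the question."""
    notes = "\n".join(f"- {m}" for m in retrieve(query, memories, k))
    return f"Relevant notes:\n{notes}\n\nQuestion: {query}"
```

With hypothetical memories like `["The Q3 deadline is September 30.", "We use Postgres for the main database.", "Standup moved to 10am."]`, `retrieve("What was the Q3 deadline?", memories)` returns the deadline note, and `build_prompt` pastes it above the question. Nothing here knows when anything was said.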

Where RAG Breaks Down

RAG matches topics, not narrative. Say you complained about Product X in Week 1 (“pricing model is confusing”), praised it in Week 2 after a fix (“new pricing is great, adoption up 40%”), and then ask in Week 3, “What do I think of Product X?” RAG finds both memories because both match the topic. You get a confused answer or, worse, it retrieves only the Week 1 complaint (it was longer and more detailed) and tells you that you hate the product.
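The Product X failure is easy to reproduce with the same kind of toy similarity search. The two memories below paraphrase the example above (the exact wording is made up for illustration), and each carries its week number, but ranking only looks at the text, so the longer, outdated Week 1 complaint comes out on top.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter[str]:
    """Toy bag-of-words vector; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter[str], b: Counter[str]) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Each memory records the week it was said, but similarity search never looks at it.
memories = [
    (1, "I think the Product X pricing model is confusing and I do not like Product X."),
    (2, "Product X new pricing is great, adoption up 40%."),
]


def retrieve(query: str, k: int = 1) -> list[tuple[int, str]]:
    q = embed(query)
    return sorted(memories, key=lambda m: cosine(q, embed(m[1])), reverse=True)[:k]


top = retrieve("What do I think of Product X?")
print(top)  # the Week 1 complaint wins on word overlap, even though it is outdated
```

The Week 1 note shares more words with the question (“I”, “think”, “do”, and “Product X” twice), so it scores higher; nothing in the ranking asks which opinion is newer.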

RAG can't handle temporal reasoning (what came first, what changed), evolving context (how opinions shift over time), or relationship tracking (connecting concepts discussed separately). It finds the most similar text, not the most relevant or most up-to-date facts.

✨ What Actually Fixes AI Memory: Temporal Knowledge Graphs

The real solution isn't better search; it's better memory architecture:

- Temporal awareness: Memory that understands when things happened and how they evolved over time

- Relationship tracking: Connecting concepts even when discussed in different conversations or tools

- Cross-tool continuity: Memory that works across ChatGPT, Claude, Cursor, and Gemini: explain once, and it works everywhere

- User sovereignty: Full control to view, edit, and organize what your AI remembers

CORE uses a temporal knowledge graph instead of plain vector search. Every conversation becomes part of a living, connected memory that records when each piece of information was added, captures how concepts relate to each other, preserves how your thinking evolved over time, and works across every AI tool you use.
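CORE's internal schema isn't described here, so this is a generic sketch of the temporal-graph idea, not CORE's implementation or API. Each fact is a (subject, relation, object) edge with a validity interval. A new fact about the same subject and relation closes the old edge instead of overwriting it, so both the current state and the history survive. `TemporalGraph`, `Edge`, and the dates in the usage note are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class Edge:
    """One fact as a (subject, relation, object) triple with a validity interval."""
    subject: str
    relation: str
    obj: str
    valid_from: date
    valid_to: Optional[date] = None  # None means "still true"
    note: str = ""


@dataclass
class TemporalGraph:
    edges: list[Edge] = field(default_factory=list)

    def add(self, subject: str, relation: str, obj: str, when: date, note: str = "") -> None:
        # Close out (don't delete) any currently valid fact with the same subject and relation.
        for e in self.edges:
            if e.subject == subject and e.relation == relation and e.valid_to is None:
                e.valid_to = when
        self.edges.append(Edge(subject, relation, obj, when, note=note))

    def current(self, subject: str, relation: str) -> Optional[str]:
        """The fact that is true now, if any."""
        for e in self.edges:
            if e.subject == subject and e.relation == relation and e.valid_to is None:
                return e.obj
        return None

    def history(self, subject: str, relation: str) -> list[Edge]:
        """Every version of the fact, oldest first."""
        matches = [e for e in self.edges if e.subject == subject and e.relation == relation]
        return sorted(matches, key=lambda e: e.valid_from)
```

Replaying the Product X example with `g.add("Product X", "opinion", "pricing model is confusing", date(2025, 1, 6))` and then `g.add("Product X", "opinion", "new pricing is great", date(2025, 1, 13), note="adoption up 40%")`, `g.current("Product X", "opinion")` returns the Week 2 opinion, while `g.history(...)` still shows the Week 1 complaint along with the date it stopped being true.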

Example:

You tell ChatGPT, “We increased pricing from $99 → $149 for enterprise users.”

Weeks later, you ask it, “What changed in our pricing since Q1 and why?”

RAG might return both prices with no context.

CORE returns a timeline:

“Q1: $99 → Q2: $149 (Reason: Enterprise feature demand).”
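Once facts carry a time and a reason, the pricing question becomes an ordered query rather than a similarity search. This sketch assumes facts are stored per quarter with an optional reason; `PriceFact` and `pricing_timeline` are hypothetical names, not CORE's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PriceFact:
    """A dated pricing fact. Quarter-level granularity is an assumption for this sketch."""
    quarter: int
    plan: str
    price_usd: int
    reason: str = ""


def pricing_timeline(facts: list[PriceFact], plan: str, since_quarter: int) -> str:
    """Order a plan's price facts by time and render each change with its reason."""
    history = sorted(
        (f for f in facts if f.plan == plan and f.quarter >= since_quarter),
        key=lambda f: f.quarter,
    )
    if not history:
        return "No pricing changes recorded."
    text = f"Q{history[0].quarter}: ${history[0].price_usd}"
    for fact in history[1:]:
        text += f" → Q{fact.quarter}: ${fact.price_usd}"
        if fact.reason:
            text += f" (Reason: {fact.reason})"
    return text
```

Given `[PriceFact(2, "enterprise", 149, "Enterprise feature demand"), PriceFact(1, "enterprise", 99)]`, `pricing_timeline(facts, "enterprise", since_quarter=1)` returns `Q1: $99 → Q2: $149 (Reason: Enterprise feature demand)`, even though the facts were stored out of order.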

That’s not recall; that’s understanding.

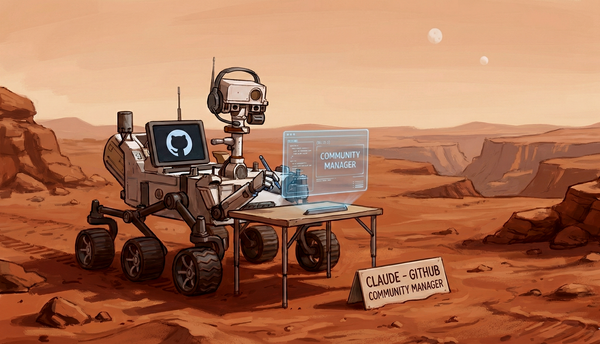

🚀 Why AI Memory Needs to Work Across All Your Tools

Your memory shouldn't be locked into one AI tool. You brainstorm in ChatGPT, code in Cursor, debug in Claude Code, and research in Gemini, but right now each tool has zero context about what happened in the others, so you're constantly re-explaining.

With persistent, cross-tool memory, you explain something once and every AI tool remembers it. Brainstorm in ChatGPT, switch to Cursor, debug in Claude, and your context follows you.
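Conceptually, cross-tool memory means every client reads from and writes to one shared store. Real integrations go through a protocol such as MCP; this sketch doesn't use MCP at all and swaps in a plain JSON file just to show the shape of the idea. `SharedMemory` and `demo` are hypothetical names.

```python
import json
import tempfile
from pathlib import Path


class SharedMemory:
    """Hypothetical shared store: one JSON file that every tool's client uses."""

    def __init__(self, path: Path) -> None:
        self.path = path

    def _load(self) -> list[dict[str, str]]:
        if not self.path.exists():
            return []
        return json.loads(self.path.read_text())

    def remember(self, source: str, fact: str) -> None:
        facts = self._load()
        facts.append({"source": source, "fact": fact})
        self.path.write_text(json.dumps(facts))

    def recall(self) -> list[str]:
        return [f"{f['fact']} (from {f['source']})" for f in self._load()]


def demo() -> list[str]:
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "memory.json"
        # A "ChatGPT" client writes a fact through its own instance...
        SharedMemory(path).remember("ChatGPT", "We ditched MongoDB for Postgres.")
        # ...and a separate "Cursor" client reads it back without re-explaining.
        return SharedMemory(path).recall()
```

`demo()` returns `["We ditched MongoDB for Postgres. (from ChatGPT)"]`: the second client never saw the original conversation, only the shared store.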

CORE scored 88.24% accuracy on the LoCoMo memory benchmark, well ahead of traditional RAG systems at around 61%. It supports ChatGPT, Claude, Cursor, Gemini, Obsidian, Notion, and any MCP-compatible tool (900+ GitHub stars, 500k+ facts created).

CORE is open-source

We’re building CORE in public, and it’s fully open source. If you share our vision of memory that belongs to individuals, not platforms:

- ⭐ Star us on GitHub to support open source memory for individuals

- 🚀 Try CORE today:

  - Quick start: Sign up at core.heysol.ai

  - Self-host: Deploy on Railway

Built by developers tired of repeating themselves for developers tired of repeating themselves.

#NeverRepeat